Zen and the Art of Meaning

There are many accusations that we've become a throw-away society, with complex electronic items in particular being discarded for want of simple repairs. Treehugger reports that Americans collectively got rid of over 400 million electronic products in 2010, many of them still working. Due to the rapid development of technology and consumer demand for thinner and lighter electronics, many devices are no longer designed to be repairable, with even the battery sealed in. They end up being dumped, often in the Third World, as this story from Ghana vividly illustrates. This has obvious environmental implications, prompting the design agency IDEO to promote "what's old is new again", encouraging re-use, repair and recycling. Websites like iFixit describe in detail how to repair many current devices, while admitting that some are effectively unrepairable.

So the will to repair and re-use is there. However, electronics will need to be designed to facilitate this, which could mean sacrificing slimness and lightness for repairability. This could become a selling point. Reynolds Valveart is a micro-business based in Australia, aiming to capture the "tone" of vintage guitar amplifiers. They claim this can only be achieved with valves, and point out that their amplifiers are "infinitely repairable" using standard components. Dickinson Amplification in the UK make similar claims. This is where design meets art, not in the sense of superficial styling but at the heart of the very components that give these amplifiers their distinctive tone. Repairability is also at the heart of these designs: they are made to be used and cherished for decades by performing musicians. Outside the field of electronics, designer-makers such as Sebastian Cox make wooden furniture and light fittings that build sustainable design principles right into the products, not only by using sustainable sources of timber but also by making the design and building process transparent, with visible joints and what he calls "honest" construction.

Robert Pirsig explores these issues in his first book Zen and the Art of Motorcycle Maintenance: An Inquiry into Values, originally published in 1974. It documents in the first person a motorcycle journey made across America with his son and two friends. His reflections on attitudes to motorcycle repair led to the realisation that his travelling companions and even some repair technicians didn't want to involve themselves in the workings of the machine - they were "spectators". In contrast, he took the time to learn the "personality" of his own machine and how to repair it. At first this distinction didn't seem too important, but on further reflection it became "huge". Pirsig saw beauty in the function of a piece of beer can that was ideal to repair the handlebars on his friend's motorbike, but his friend only saw the fact that it was from a beer can and refused to use it. This incident symbolised the different visions of reality that they both had, with the narrator having a "classical" view of the world in terms of its function, in contrast to the more "romantic" view that sees the world in terms of outward appearance. The point that Pirsig was trying to make is that these world-views are not mutually exclusive and that it should be possible to reconcile them: "the Buddha is everywhere", and even the very physical motorcycle is a realisation of a "mental phenomenon". However, his previous attempts at this reconciliation through the concept of "quality" had led to a nervous breakdown. There is much philosophising, far too much to cover here, but one concept that is relevant is that of "gumption", where the mechanic is at one with the machine, able to "feel" their way to a successful repair. Pirsig pointed out that a real craftsman works by the "material and his thoughts... changing together", just as an artist does. Peace of mind is key to developing this way of thinking, to be able to perceive the "goodness" that "unites" classical and romantic thinking "within a practical working context".

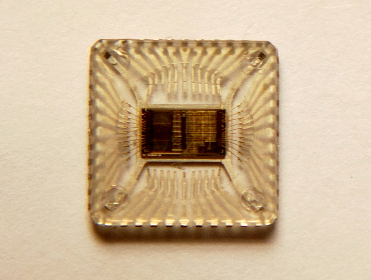

This relates to the concept of "focal practices" originated by Albert Borgmann in his 1984 book Technology and the Character of Contemporary Life. In contrast to the "device paradigm" that is becoming more prevalent in everyday life, where technology delivers services that people use but don't understand, focal practices are comprehensible to the people who engage in them, such as tending a woodburning stove as opposed to relying on the device of central heating. In the case of modern digital technology, its very complexity means that even experts can't interact with every component of a modern device such as a mobile phone. In contrast, a valve amplifier is made of components that can be soldered together in a simple workshop and the inner workings of the valves themselves are revealed through the glass envelope.

However, there is now a growing "maker" culture, as reported in Wired magazine in 2011, driven by low cost 3D printers and DIY electronics, such as a USB charger made out of a mints tin. Maker "faires" are springing up across the US and now in the UK as well. Two products in particular allow digital technology to become a focal practice: the Arduino microcontroller, which allows hobbyists to interface a computer with a variety of sensors, and the Raspberry Pi single board microcomputer. The potential applications of Arduino are showcased on their website, including a "wearable soundscape". The process of working with Arduino can start with assembling the circuit board from its individual components or, taking involvement a step further, even etching and drilling the circuit board itself. The Arduino board can be programmed using a range of open source software tools that are freely downloadable. The Raspberry Pi Foundation is a UK registered charity with the aim of making a low cost educational computer, and its boards are now finding numerous applications in developing countries. Software available includes distributions of the Debian Linux operating system, and there is a thriving user community that has developed many case designs, such as the Pibow.

Is there such a thing as sustainable design?

Design might be one of the most misused words in the English language, often taken to mean the external appearance of an object, as in "designer" clothes. The UK Design Council see design as making "ideas tangible" and it is what "links creativity and innovation" with a specific goal of human benefit. The design process is about thinking through making things with the end user in mind. The Design Council see design as a linear process of "Discover, Define, Develop and Deliver", but not everyone agrees with this model. Tim Brown, in his book Design Thinking, outlines "three spaces of innovation", Ideation, Inspiration and Implementation, which interact in a circular process. He also emphasises the importance of empathy in design, where designers can "imagine the world from multiple perspectives", inspiring designs that meet people's needs.

Donald Norman points out in his book The Psychology of Everyday Things that many designs are difficult to use because they don't offer the right "affordances" for the user, such as a door with a pull handle that actually pushes open, leading to frustration as people try to pull it. Designers can learn a lot from the mistakes people make when trying to use existing designs. His thoughts on this lead to two principles of good design:

1) Provide a conceptual model that makes sense to the user.

2) Make things visible so the user can understand the conceptual model and work with it.

Victor Papanek, in his book Design for the Real World, defines design as the "conscious effort to impose meaningful order", stressing the role of radical thinking to meet real needs, particularly in the Third World. An example of this is huts in the Third World made out of oil containers: a simple but radical innovation would be to make the containers more suitable as building materials. He highlights that the designer has a social responsibility to overcome the damage done by previous industrial designers in creating products that have not only harmed people but have damaged the environment of "Spaceship Earth".

The Design Council also champion "sustainable design", which they believe can "save the planet" and which considers the triple bottom line of meeting social needs, minimising environmental impact and enabling business to make a profit. All this seems very reasonable, but the last criterion is where the problem lies, according to Joel Bakan in his book The Corporation, which was also made into a film. Its starting point is that the corporation is a "legal person", but subjecting it to a psychological assessment indicates that it has a psychopathic personality. This personality is built into the legal structure of the corporation, which mandates that its sole purpose is to create profit and increase its value to shareholders. Even if the corporation's directors wish to act in a more humane way, the wishes of shareholders, mediated through the organisational structure, won't let them. Bakan gives the example of the Body Shop, where the founder Anita Roddick realised that by selling stock to shareholders, she had "made a pact with the devil".

This means that the triple bottom line isn't sustainable at all, as long as there are corporations making a profit by any means possible, which can't be reconciled with environmental sustainability. Drawing on the pioneering work of E. F. Schumacher amongst others, Stuart Walker at Lancaster University redefined the concept of the triple bottom line to come up with the "quadruple bottom line" in his book The Spirit of Design, where social value, environmental care and personal meaning (or "spirituality") are underpinned by economic systems, which are simply a mechanism rather than an end in themselves. So sustainable design might be about looking at how organisations make wealth and interact with society.

It is possible to create successful organisations that aren't solely about making a profit for shareholders. One example is the Mondragon Corporation in Spain, which describes itself as the "world leader in the co-operative movement", working for the benefit of its members and for society in general. The organisation still has to compete with others who do not share its values, but by investing in its workers as its major asset, it secures a competitive advantage, thus succeeding in both business and human terms. Taking a different approach, Interface is a heavy industry company, making carpet tiles. Driven by the vision of its founder Ray Anderson, the company set itself the goal of becoming completely sustainable in environmental terms by 2020, reconciling this with its duty to shareholders by taking a longer term view of continued profit achieved through sustainability. It aims to lead through example, influencing the industry to change its ways.

The book Small Giants by Bo Burlingham highlights that companies can succeed in a competitive market by putting values before profit, by being the "best at what they do", including caring for employees. They are either privately owned by the founding entrepreneurs, or their investors share the values of the company. The decisive moment in the history of these companies is when the owner decided not to sell out, but to concentrate on keeping their "soul" or "mojo". In the UK, the Government is promoting social enterprise as the future of public service delivery, even creating the Community Interest Company legal form specifically for social enterprise. The government funded agency Social Enterprise UK defines social enterprises as "businesses that trade to tackle social problems, improve communities, people’s life chances, or the environment", reinvesting surpluses into the local community. These examples illustrate a growing trend in society towards organisations that are sustainable in the wider sense of benefiting society and the environment by promoting social values. A common theme is that these organisations are driven by the personal values of their founders or investors.

The transformation of identity - who are we?

Peter Drucker defined innovation in a business context in his 1985 book Innovation and Entrepreneurship: "Innovation is the specific instrument of entrepreneurship... the act that endows resources with a new capacity to create wealth". Innovation can take many forms. Incremental innovation is where a technology is improved without changing its fundamental character, such as desktop personal computers that have become thousands of times faster in the last 20 years, but are used for similar tasks. Disruptive innovation is where new technology, at first seen as a novelty, develops until it displaces existing established technology. An example of this is the digital camera, which in the late 1990s and early 2000s was an expensive toy used mainly for news gathering or by estate agents. In the mid 2000s, improvements to the technology enabled the digital camera to replace the compact film camera for everyday use to record family events, holidays etc. Clayton Christensen, who introduced the concept of disruptive innovation, explains it in this video.

Eric von Hippel, in his freely downloadable book The Sources of Innovation, saw innovation as primarily coming from users of technology, who see problems with established processes and find the means to change them. Taking that thinking a step further, the authors of this academic paper, which can be freely downloaded, claim that innovation is key to "modernity", when human communities began to be more adventurous, breaking free of tradition to move forward towards a modern society. This entailed transformational innovation, described by Innovation Excellence as the "granddaddy of innovation". It was the transformational innovations of the telegraph, telephone, radio and finally the Internet that shaped the development of current Western society. Marshall McLuhan identified issues with how they are changing people's lives in his 1964 book Understanding Media: The Extensions of Man, pointing out the implications of people being connected at "electric speed" to a "sudden implosion" of information. He was referring to television and the telephone, but global electric communications have been available since the mid 1800s with the telegraph network. This image shows the international telegraph network in 1901, looking remarkably similar to the map of the main fibre-optic links now.

With the advent of international communications technologies, starting with the telegraph, then the telephone and radio, people were confronted with the knowledge that others throughout the world lived their lives very differently, with correspondingly different worldviews. This leads to the realisation that they, too, could potentially choose their identities and lead a new life. Perhaps best known for advising the UK government on how to find a Third Way between socialism and capitalism, Anthony Giddens made a significant contribution to sociology from the 1970s to the 1990s, particularly on how communications technology changed not only how people lived their lives, but also their identity and perception of their place in the world. In his 1991 book Modernity and Self-Identity, Giddens introduced the concept of "reflexivity" as the key characteristic of "late modernity" to explain this phenomenon, where individuals have to ask themselves "What to do? How to act? Who to be?", prompted by a mass media that provokes self-questioning. Many turn to self-help books, hoping for a transformation, but they generally don't work, perhaps because really changing your life is hard work. These books and similar life-changing attempts are part of the process that Giddens pointed out, in which identity becomes more about what he called a person's "biography", a narrative that integrates their remembered past and anticipated future. Social networking now offers new tools to construct this biography, as the recent story from the BBC about "girlfriends for sale" to show off on Facebook profiles indicates.

Even further than that, people can now participate in virtual worlds, with Farmville perhaps being the best known. It is an online game by Zynga, with the current Farmville 2 showing an idealised country landscape that players have to "farm": plant crops, water them, harvest them, look after the chickens etc. They can sell their "produce" to other players to earn "coins", which can be used to buy more produce, animals or land. Entire websites are devoted to offering hints and tricks for playing Farmville. According to Wired, Farmville is a "self-sustaining social system", cunningly designed to hook players in, either spending money or "trading" with their Facebook friends. Short timescales, like crops which grow within hours, encourage continued involvement in the game, as does its promotion of a "sense of peace", a break from the real world. The success of this game leads to some striking statistics: in the US, virtual farmers in Farmville outnumber real farmers by 30 to 1, collectively spending 70 million hours each week playing the game, as this infographic shows. Reassuringly, some people find Farmville just too much like hard work, preferring to go out and participate in the real world.

Alone together - the digital panopticon?

The moment that computing became part of everyday life could be when the Apple iPhone was released in 2007. Despite issues at launch, the device was a runaway success, as it put easy to use computing power and wireless connectivity in the pockets of millions for the first time. Later versions improved on the first model, with hundreds of thousands of "apps" now available. Although there had been previous devices such as the Blackberry, the Nokia Communicator series (arguably the first "smartphone") and various Windows Mobile handhelds, these were aimed more at business than at the consumer market. The success of the iPhone and rival devices has led to over 1 billion people in the world now owning a smartphone.

However, the MIT professor and writer Sherry Turkle has identified concerns with how the use of mobile communications technology is changing how people interact with each other. Essentially her findings are that mobile technology makes communication easier and more convenient but paradoxically isolating, leading to people feeling "alone together". She has also identified worrying signs of addiction to the use of mobile devices, which could be due to them allowing constant interaction while denying the satisfaction of face to face communication. Research at the University of Maryland confirms these fears. These issues with addiction to technology were explored over a century ago in E. M. Forster's novella 'The Machine Stops', originally published in 1909, where many of the characters did not survive the machinery powering their artificial environment coming to an abrupt halt. The beginning echoes Turkle's concerns, as the main character's son complains about not being able to see her in person but only through electronic communications devices. These devices also resulted in information overload, with thousands of "friends", whom she had never met in person, all demanding attention at once.

The popularity of the social networking site Facebook highlights this issue. Established in 2004, opened to the general public in 2006 and now boasting over a billion members, it could be becoming the "ambient background of the internet", increasingly integrated with many other websites. Not only that, but Facebook and other social networking sites seem to be becoming integrated into everyday life, with employers now routinely looking at the online profiles of applicants while considering job applications (some have even demanded login details, despite this being against the terms of service). Andrew Keen, in his book Digital Vertigo, compares this to the "panopticon", or "inspection house", created by Jeremy Bentham, where people were continuously visible while remaining alone, which was intended to make them work more effectively. As services such as Google Now rely on location and context awareness, users may now feel that they are being watched by an electronic panopticon. However, people are starting to fight back, with CNN reporting in 2012 that leaving Facebook is a growing trend, with some claiming that participating in the social network was making them feel more self-conscious, competing with others to show who had the more exciting lives. The writer Jonathan Franzen highlights how the real world can eclipse the online world of "likes", while the Anti-Facebook League of Intelligentsia describes itself as "The First Organized American Protest Against Facebook".

The recent limited release of prototypes of Google Glass threatens to make science fiction a reality, particularly William Gibson's Virtual Light, by superimposing an augmented reality onto everyday life. However, the included camera is causing consternation amongst privacy advocates as it can not only identify what the wearer of the Glass device is doing and where they have been, but also any passers-by through facial recognition. This is an example of the consequences for privacy of computing devices becoming ever smaller. Others include heart monitors that could report back to health insurers and cars reporting their owner's driving habits. Every Web user is already adding to the comprehensive information that is accumulating on them, unless they take careful precautions. This loss of privacy has deeper implications than the disclosure of facts that people might not want others to know, in that their behaviour could change in anticipation, becoming more controlled, more what they would like others to see. Bruce Schneier pointed out in a 2006 Wired article that privacy is a "basic human need" for the development of individuality. This article debunks the often repeated statement "if you've got nothing to hide, you have nothing to fear", while Professor Daniel Solove offers a more academic analysis. Nicholas Carr goes further, claiming that "human beings are not just social creatures; we're also private creatures", with privacy being the "skin of the self", without which one's personality will wither away.

The disappearing computer

Electronic digital computers were originally the size of a room, powered by glowing valves. One of these, the Harwell Dekatron, has recently been restored at the UK National Museum of Computing. With the invention of the transistor in 1947, followed by the microprocessor in 1971, computers became smaller and more powerful, to the point in the early 1980s when every home or office could have its own. The process of development continued, however, with microcomputers finding their way into many everyday objects. The modern car now has up to 100 electronic control units (ECUs) depending on millions of lines of software code. Predictions were made over 20 years ago, first in science fiction and then by academics in the field, that computers would eventually become so pervasive that they would change everyday life and the fabric of society. Mark Weiser's ground-breaking paper "The Computer for the 21st Century", published in 1991, predicted that computers would "vanish into the background", with people being unconscious of their existence in the same way that they are of the printed word, which is ubiquitous in man-made environments. This is an academic paper, but a draft can be freely downloaded. He makes a comparison with electric motors, which have enabled the progression in a century or so from a single engine powering a whole factory to everyday objects containing many small motors, each of which serves a specific purpose.

Ubiquitous computing is now a reality of life, where miniaturised microprocessors can interface with the outside world through the Internet. This leads to the Internet of Things, allowing everyday objects to take on their own unique identity and have a presence on the World Wide Web. This potentially allows a transformation of their meaning, as not only people but objects can become a part of the Web. A key enabling factor is the change in the Internet Protocol from version 4 (IPv4), which allows for over 4 billion addresses (enough for the initial development of the Internet but now running out) to version 6 (IPv6), which potentially allows every object on earth to have its own unique Internet address. The company Evrythng is now exploiting this, allowing manufacturers of objects to embed digital information or Web links, which can be used for personalised marketing. At the same time, the company making the objects can collect a wealth of information on their customers.
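To give a sense of the scale involved, the following is a minimal sketch in Python that compares the two address spaces using the standard `ipaddress` module. The figure of one trillion connected objects is purely an illustrative assumption, not a number from any of the sources mentioned here, and the helper `addresses_per_object` is a hypothetical name.

```python
import ipaddress

# Total number of addresses in each protocol's address space.
ipv4_total = ipaddress.ip_network("0.0.0.0/0").num_addresses  # 2**32
ipv6_total = ipaddress.ip_network("::/0").num_addresses       # 2**128

# Assumption for illustration only: one trillion connected objects.
ASSUMED_OBJECTS = 10**12


def addresses_per_object(total_addresses: int, objects: int) -> int:
    """Whole number of addresses available per object (floor division)."""
    return total_addresses // objects


print(f"IPv4 addresses: {ipv4_total:,}")
print(f"IPv6 addresses: {ipv6_total:,}")
print("IPv4 addresses per object:",
      addresses_per_object(ipv4_total, ASSUMED_OBJECTS))
print("IPv6 addresses per object:",
      f"{addresses_per_object(ipv6_total, ASSUMED_OBJECTS):,}")
```

Under that assumption IPv4 cannot give each object even a single address, while IPv6 leaves an enormous surplus per object, which is why the newer protocol matters for the Internet of Things.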

Existing objects can be personalised with the openly available Cosm (formerly Pachube) Application Programming Interfaces (APIs). One example of the application of this technology, the Good Night Lamp, illustrates Mark Weiser's point about the technology disappearing. The Big Lamp is in the shape of a house, and when it is switched on, any smaller Little Lamps linked to it anywhere in the world will also switch on. In the last few years, the convergence of small, cheap microprocessors and fast wireless Internet has led to a range of innovative applications of ubiquitous computing. Lancaster University is a pioneer in this field, with John Hardy's projects to create projected interactive displays gaining particular attention, as using his software code, they can be set up with a Windows PC, a projector and a Microsoft Kinect. The Kinect was originally developed for the Xbox 360 games console, enabling players to use natural gestures and spoken commands. However, with both Windows and open source software available, it is now being used for a range of other purposes.
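The linked-lamp behaviour described above can be sketched as a simple observer pattern. This is only an illustration of the idea, not how the Good Night Lamp or the Cosm APIs are actually implemented: the class names `BigLamp` and `LittleLamp` are hypothetical, and the network connection between lamps is replaced by direct method calls.

```python
class LittleLamp:
    """A small lamp that mirrors the state of the Big Lamp it is linked to."""

    def __init__(self, location):
        self.location = location  # illustrative label only
        self.is_on = False

    def update(self, is_on):
        # In a real product this would arrive as a message over the Internet.
        self.is_on = is_on


class BigLamp:
    """The house-shaped lamp whose switch drives every linked Little Lamp."""

    def __init__(self):
        self.is_on = False
        self._linked = []

    def link(self, lamp):
        # Register a Little Lamp and bring it in line with the current state.
        self._linked.append(lamp)
        lamp.update(self.is_on)

    def switch(self, is_on):
        # Change our own state, then notify every linked lamp.
        self.is_on = is_on
        for lamp in self._linked:
            lamp.update(is_on)


big = BigLamp()
little_lamps = [LittleLamp("kitchen"), LittleLamp("overseas")]
for lamp in little_lamps:
    big.link(lamp)

big.switch(True)
print([lamp.is_on for lamp in little_lamps])
```

The user only ever touches a lamp switch; the computing that carries the state from one lamp to the others stays out of sight.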

Ubiquitous computing is not an unmixed blessing, however, as the proliferation of connected devices and the exchange of data between them raises issues about energy and resource consumption. This infographic vividly illustrates the problems, with the amount of data projected to quadruple from 2012 to 2016 in Europe while data centres in the United States already use 2% of the nation's electricity. Much of this is wasted, due to outdated practices of leaving even dormant servers on all the time in case they crash, leading to at best only 12% of the energy the data centre consumes being used to do useful work. The writer Adam Greenfield has explored the issues with ubiquitous computing in his book 'Everywhere', published in 2006, which highlights how computing can be embedded in the built environment and in everyday objects. However, this raises questions about privacy through "seamless" systems that act without user intervention or knowledge, gathering information that could undermine an individual's ability to manage their presentation of self, effectively being in public at all times. He calls for this technology to be used with caution, pointing out that many aspects of life will not be improved by ubiquitous computing.
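The figures quoted above can be put into perspective with a little arithmetic. The sketch below assumes steady compound growth between 2012 and 2016 (the infographic gives only the overall factor of four) and treats the 12% figure as the share of data centre energy doing useful work; the function and variable names are illustrative.

```python
def annual_growth_rate(total_factor: float, years: int) -> float:
    """Compound annual growth rate implied by an overall growth factor."""
    return total_factor ** (1 / years) - 1


# Data volume quadrupling between 2012 and 2016, assuming steady growth.
growth = annual_growth_rate(4.0, 2016 - 2012)

# US data centres use 2% of national electricity; at best 12% of that
# energy does useful work, according to the figures quoted above.
DATA_CENTRE_SHARE = 0.02
USEFUL_FRACTION = 0.12

useful_share = DATA_CENTRE_SHARE * USEFUL_FRACTION
overhead_share = DATA_CENTRE_SHARE - useful_share

print(f"Implied annual data growth: {growth:.1%}")
print(f"Share of national electricity doing useful work: {useful_share:.2%}")
print(f"Share of national electricity lost to overhead: {overhead_share:.2%}")
```

On these assumptions, data volumes grow by roughly 41% a year, and well over 1.7% of national electricity is consumed by data centres without doing useful work.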

Computing in the cloud - out of sight out of mind?

Cloud computing is becoming part of everyday life, with its move into the mainstream marked by the release of Microsoft Office 365, which is promoted as a tool not only for creating documents but for sharing and collaboration worldwide. Sign up for the service, get on with your work and your documents are magically accessible wherever you go. It's a tempting prospect, especially to anyone who has been meaning to back up their data "sometime", then lost vital documents or precious memories to computer failure. Cloud computing represents a shift from the individual or corporation being responsible for their own computer systems and data storage to outsourcing this responsibility to service providers that offer reassuring figures for uptime and guarantees against data loss (but possibly not reassuring enough). For a growing company, cloud computing offers the potential to scale up their computing requirements immediately as demand grows, giving them a competitive advantage. Taking the process even further towards "computing as a utility", software applications can now be accessed through the Web, removing licensing and installation issues. For example, Amazon is a major provider of cloud computing services, offering virtual servers that are used by many big businesses. Google is developing a business model around cloud computing, with its low-cost Chromebooks, a laptop replacement that can only access Google services, and its high-end Pixel laptop that comes with 1TB of on-line Google Drive storage space. A major advantage of cloud computing is that it enables open data, allowing new combinations of existing data from a number of sources, which could lead to new findings. The Guardian Open Platform is an example of open data, allowing "partners" to both use and contribute to the Guardian's information resources through publicly available Application Programming Interfaces, allowing a range of visualisations of current issues. One of these is Nukeometer, giving the (worrying) number of nuclear warheads within range of any location.

However, there are potential problems with cloud computing, including security of data, technical problems hindering access to the service and the requirement to have an Internet connection at all times. One alternative is to become one's own cloud provider, which could offer more control over data. Some technical knowledge is needed, but the process is straightforward, with Lifehacker claiming a personal cloud service can be set up in five minutes provided you have a server or website available. On a commercial level, various providers including Ubuntu offer private cloud services.

Cloud computing has been promoted as more environmentally friendly, with the charity Carbon Disclosure Project (CDP) issuing a press release claiming that the UK economy could save £1.2 billion in energy costs and nearly 10 million tons of carbon dioxide by 2020 by switching to cloud computing. However, cloud computing relies on data centres to hold the growing volume of information and deliver the connectivity that businesses are relying on, which are causing their own problems. The New York Times points out that the proliferation of data centres in what was rural farmland in the state of Washington is contributing to air pollution from diesel backup generators. Attempts are being made to deal with this issue, with a major data centre for Facebook being located near the Arctic Circle to provide natural cooling. In contrast, Apple have recently turned to solar power to power a data centre in North Carolina.

The development of centralised computing resources accessible through the Internet made possible the concept of open source software, such as the Linux operating system and the LibreOffice software suite. One of the pioneers of open source, Eric Raymond, talks about "hacking" and its early development, outlining how "process transparency" through a "bazaar" enables software to be refined through a process of continual peer review, benefiting from the wisdom of crowds. The Internet makes this possible, allowing a central software repository to be accessed by contributors worldwide, with the whole process overseen by a single individual or group acting as an "architect". The best known of these is Linus Torvalds, the architect of the Linux operating system. Originally developed as a hobby project in 1991, Linux is now comparable to Windows or Mac OS, and is overseen by the Linux Foundation. The principles of open source are now being applied in other areas, including crowdsourcing of product development and the development of Wikipedia, a user contributed online encyclopedia established in 2001. Wikipedia has become so successful that, by 2005, it was already seen as being as accurate as the long-established Encyclopedia Britannica, despite criticism of how it operates. Taking on-line collaboration even further, while the UK Conservative Party has been talking about Open Source Planning, Don Tapscott claims that the Internet can enable co-operation to solve global problems such as poverty by enabling "citizen voices" to be heard by global policy makers.